Hollywood Asshole

An Open Letter to Mark Ruffalo – one of the most outspoken, over pampered and least educated of the bunch. Him, Deniro, Springsteen and Rosie are the leading TDS tards. This guy is also lying a lot. It’s too bad as I like the Avengers, just not in real life

Iran

At least 12,000 killed in Iran crackdown as blackout deepens – This isn’t going to end well

Dilbert

R.I.P. – Dilbert Creator and Early Trump Supporter Scott Adams Passes Away at 68 – too bad. I worked with people at IBM who had worked with him, Alice, Wally and the pointy-haired boss at Pac Bell.

Economy

Inflation Holds at Nearly Half Biden Era Rate, ‘Core’ Rate Lowest Since March 2021

Artificial Intelligence

Even Meta and Microsoft Engineers Ditch Company AI for Claude – because ChatGPT/OpenAI, Gemini and most of the rest of the AI engines are crap. I can't personally vouch for Claude, but I know the others are as biased as Google is.

College Sports

8 Of The Largest Individual College Sports Donations In 2025 – It seems like a better use of the money to put it towards academics, as much as I like watching sports. We’d be graduating less retards that way

Guy Stuff

Looking For Evidence That Dudes Rock? Here Is The World Record For Farthest Golf Shot Landed Into A Moving Car – I still throw stuff behind my back and add difficulty to anything sports-related to make the victory sweeter.

The 11 Teams Responsible For The Longest Playoff Losing Streaks In NFL History

Climate Hoax

Axios: ‘The world’s great climate collapse’: ‘The climate agenda’s fall from grace over the past year has been stunning — in speed, scale & scope’ – you can only tell a lie for so long before you become the boy who cried wolf. I’m looking at you, Al Gore.

Defying the Law

Bill Clinton Defies Epstein Subpoena, Risking Contempt Of Congress – So what? They aren’t going to do anything and can’t throw him in jail. He’s making a mockery of Congress. In a way, he’s also admitting guilt as a pedo by not going

Our Current Bane of Existence

Confessions of a Recovering Liberal White Woman – Worse than Karens, they are mentally ill and are oug to hurt families, the country and ultimately themselves. How do we rid our lives from these creatures.

Cars

2017 Ferrari LaFerrari Aperta – 96 Miles, 1 of 210 Produced, 6.3L/949 HP Hybrid-Drive V-12 – so bad it’s not even legal to drive on the street.

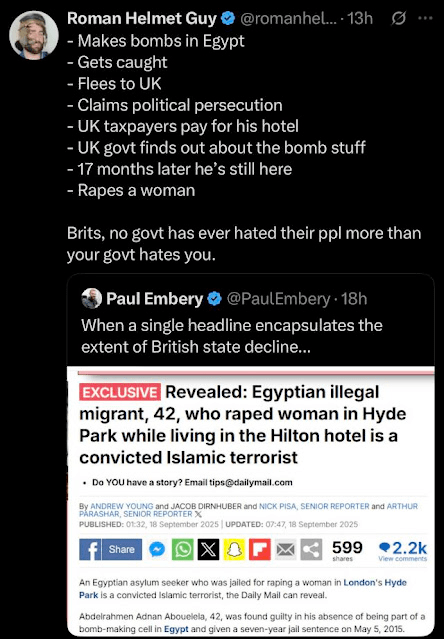

Muslim Rape Gangs

Islam’s Rape Gangs — As Instructed in the Mosques – these guys all look related. This is reason 956 why we shouldn’t let them in.

Mother of the year

California mom convicted of murder for letting toddler drown while she chatted with men on dating apps… – look at that blood alcohol level.